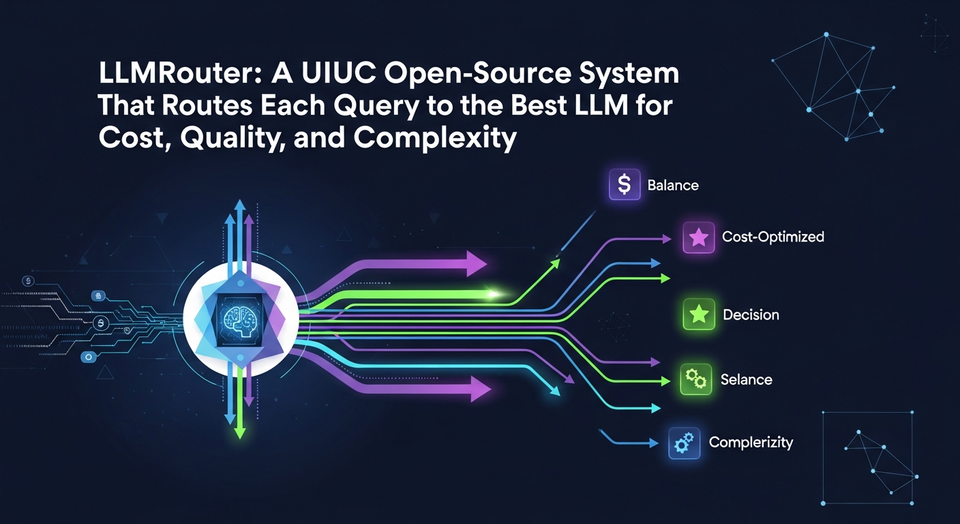

Choosing the “right” large language model (LLM) for every prompt is becoming a practical engineering problem, not just a research question. Teams often run multiple models—some cheaper and faster, others more capable and expensive—and the challenge is deciding, query by query, which model to call to hit quality targets without overspending. LLMRouter is designed to make that decision systematic: it inserts a routing layer between your application and a pool of LLMs, selecting the most suitable model for each request based on complexity, cost constraints, and desired output quality.

Built by the U Lab at the University of Illinois Urbana-Champaign, LLMRouter is an open-source routing library that treats model selection as a first-class system concern. It provides a unified Python API and command-line interface (CLI), supports a broad set of routing approaches, and includes tooling to generate training and evaluation data for routers across standard benchmarks. The project also ships with a plugin system, so custom routing logic can be swapped in without rewriting the surrounding infrastructure.

What LLMRouter is and what it aims to solve

In many LLM deployments, “model selection” happens informally—through hard-coded rules, scattered scripts, or manual decisions. LLMRouter formalizes this as a routing problem: given a user query (and optionally context), the system predicts which model in a heterogeneous pool should handle it. The choice is made with explicit attention to:

- Task complexity (how hard the query is likely to be)

- Quality targets (how accurate or well-formed the answer must be)

- Cost considerations (token usage, pricing, and budget constraints)

LLMRouter “sits between” applications and multiple LLM endpoints, exposing routing through a consistent interface rather than requiring teams to build and maintain custom glue code for each model and strategy.
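
To make the trade-off above concrete, here is a minimal sketch of cost-aware selection: pick the cheapest model whose predicted quality clears a target, within an optional budget. It does not use LLMRouter's API. The `Candidate` class, the `pick_model` function, the model names, the prices, and the quality estimates are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # illustrative price, not a real quote
    predicted_quality: float   # router's estimate in [0, 1] for this query


def pick_model(candidates: list[Candidate], quality_target: float,
               budget_per_1k: Optional[float] = None) -> Candidate:
    """Return the cheapest candidate that meets the quality target.

    If no affordable candidate meets the target, fall back to the
    affordable candidate with the highest predicted quality.
    """
    affordable = [c for c in candidates
                  if budget_per_1k is None or c.cost_per_1k_tokens <= budget_per_1k]
    if not affordable:
        raise ValueError("no candidate fits the budget")
    good_enough = [c for c in affordable if c.predicted_quality >= quality_target]
    if good_enough:
        return min(good_enough, key=lambda c: c.cost_per_1k_tokens)
    return max(affordable, key=lambda c: c.predicted_quality)


pool = [
    Candidate("small-model", 0.10, 0.62),
    Candidate("medium-model", 0.50, 0.81),
    Candidate("large-model", 3.00, 0.93),
]
choice = pick_model(pool, quality_target=0.80)
print(choice.name)  # medium-model
```

In a real router the `predicted_quality` value would come from a learned model rather than a constant, which is the part the router families below differ on.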

Router families and supported models

LLMRouter groups its routing algorithms into four families: Single-Round Routers, Multi-Round Routers, Personalized Routers, and Agentic Routers. Across these categories, the project includes more than 16 routing models and baselines, all designed to be configured and executed through the same API/CLI patterns.

Single-Round Routers

Single-round routing focuses on making a decision in one step: given the query (and possibly metadata), pick the model to call. LLMRouter’s single-round lineup includes:

- knnrouter

- svmrouter

- mlprouter

- mfrouter

- elorouter

- routerdc

- automix

- hybrid_llm

- graphrouter

- causallm_router

- Baselines: smallest_llm and largest_llm

These routers cover a wide range of strategies, including k-nearest neighbors, support vector machines, multilayer perceptrons, matrix factorization, Elo rating-based approaches, dual contrastive learning, automatic model mixing, and graph-based routing. The inclusion of simple baselines (always choose the smallest or the largest model) makes it easier to measure whether more sophisticated routing actually improves cost/performance trade-offs in real workloads.
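
As an illustration of the simplest learned strategy in that list, the sketch below shows the general idea behind k-nearest-neighbor routing: find the past queries most similar to the new one and pick the model that scored best on them. This is not the library's knnrouter implementation. The function names, the two-dimensional toy embeddings, and the score table are assumptions chosen to keep the example self-contained.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def knn_route(query_vec, train_vecs, train_scores, model_names, k=3):
    """Pick the model with the best mean score over the k most similar past queries.

    train_vecs:   one embedding per past query
    train_scores: one row per past query, one column per model
    model_names:  ordered cheapest first, so ties go to the cheaper model
    """
    ranked = sorted(range(len(train_vecs)),
                    key=lambda i: cosine(query_vec, train_vecs[i]), reverse=True)
    nearest = ranked[:k]
    mean_scores = [sum(train_scores[i][m] for i in nearest) / len(nearest)
                   for m in range(len(model_names))]
    best = max(range(len(model_names)), key=lambda m: mean_scores[m])
    return model_names[best]


models = ["small-model", "large-model"]  # hypothetical names
train_vecs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
train_scores = [[1.0, 1.0],   # "easy" region: both models succeed
                [1.0, 1.0],
                [0.0, 1.0],   # "hard" region: only the large model succeeds
                [0.0, 1.0]]

easy = knn_route([1.0, 0.05], train_vecs, train_scores, models, k=2)
hard = knn_route([0.05, 1.0], train_vecs, train_scores, models, k=2)
print(easy, hard)  # small-model large-model
```

The two baselines correspond to ignoring the query entirely and always returning `models[0]` or `models[-1]`, which is why they are useful as a floor and ceiling when comparing routers.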

Multi-Round Routers and Router R1 (router_r1)

Some tasks are better handled when routing is allowed to be iterative—especially when the system may need to call one model, reflect, and then call another. LLMRouter supports this via router_r1, a pre-trained instance of Router R1 integrated into the library.

Router R1 frames multi-LLM routing and aggregation as a sequential decision process. In this setup, the router itself is an LLM that alternates between internal reasoning steps and external model calls. Training uses reinforcement learning with a rule-based reward intended to balance:

- Format (whether outputs follow required structure)

- Outcome (task success/quality)

- Cost (resource usage)
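
One way to picture how those three terms can interact is the sketch below. It is not the published Router R1 reward. The `<answer>` tag convention, the exact-match outcome check, the cost weight, and the cost cap are all assumptions picked for illustration.

```python
import re

# Assumed output convention: the final answer is wrapped in <answer> tags.
ANSWER_PATTERN = re.compile(r"<answer>(.+?)</answer>", re.DOTALL)


def rule_based_reward(output: str, gold: str, cost_usd: float,
                      cost_weight: float = 0.1, max_cost_usd: float = 1.0) -> float:
    """Combine format, outcome, and cost into a single scalar reward."""
    match = ANSWER_PATTERN.search(output)
    if match is None:
        return -1.0  # format: no well-formed answer, so nothing else is scored
    # outcome: case-insensitive exact match against the reference answer
    outcome = 1.0 if match.group(1).strip().lower() == gold.strip().lower() else 0.0
    # cost: penalty grows with spend and is capped at cost_weight
    cost_penalty = cost_weight * min(cost_usd / max_cost_usd, 1.0)
    return outcome - cost_penalty


print(rule_based_reward("The capital is Paris.", "Paris", 0.02))  # -1.0
```

Checking format first means a malformed trajectory is never rewarded for being cheap, and keeping the cost weight small means a correct but expensive answer still beats a wrong but cheap one.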

In LLMRouter, router_r1 is distributed as an extra installation target with pinned dependencies that have been tested on vllm==0.6.3 and torch==2.4.0.

Personalized Routers with GMTRouter (gmtrouter)

LLMRouter also addresses personalization—routing that adapts to individual user preferences rather than optimizing only for global averages. This is handled by gmtrouter, described as a graph-based personalized router with user preference learning.

GMTRouter models multi-turn user-LLM interactions as a heterogeneous graph whose nodes are users, queries, responses, and models. It then applies a message-passing architecture to infer user-specific routing preferences from few-shot interaction data. Reported experiments show gains over non-personalized baselines on both metrics: accuracy improves by up to roughly 21%, and AUC gains are described as substantial.
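
The graph structure described above can be sketched with plain dictionaries. This is only a data-structure illustration, not GMTRouter itself: the relation names, the toy interaction log, and the rating field are assumptions, and the averaging step at the end is a crude stand-in for learned message passing.

```python
from collections import defaultdict

# Illustrative log: (user, query, model, response, user rating in [0, 1])
interactions = [
    ("u1", "q1", "small-model", "r1", 0.9),
    ("u1", "q2", "large-model", "r2", 0.4),
    ("u2", "q3", "small-model", "r3", 0.2),
    ("u2", "q4", "large-model", "r4", 0.8),
]

# Typed nodes and typed edges are what make the graph heterogeneous.
nodes = defaultdict(set)   # node type -> set of ids
edges = defaultdict(list)  # (src type, relation, dst type) -> [(src, dst, weight)]
for user, query, model, response, rating in interactions:
    nodes["user"].add(user)
    nodes["query"].add(query)
    nodes["model"].add(model)
    nodes["response"].add(response)
    edges[("user", "asks", "query")].append((user, query, 1.0))
    edges[("query", "answered_by", "response")].append((query, response, 1.0))
    edges[("model", "generates", "response")].append((model, response, 1.0))
    edges[("user", "rates", "response")].append((user, response, rating))


def user_model_preferences(edges):
    """Average each user's ratings per model along user -> response <- model paths."""
    response_model = {resp: model
                      for model, resp, _ in edges[("model", "generates", "response")]}
    totals = defaultdict(lambda: [0.0, 0])
    for user, resp, rating in edges[("user", "rates", "response")]:
        key = (user, response_model[resp])
        totals[key][0] += rating
        totals[key][1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


prefs = user_model_preferences(edges)
best_for_u1 = max(nodes["model"], key=lambda m: prefs.get(("u1", m), 0.0))
best_for_u2 = max(nodes["model"], key=lambda m: prefs.get(("u2", m), 0.0))
print(best_for_u1, best_for_u2)  # small-model large-model
```

Even in this toy form the two users end up with different preferred models, which is the effect a global, non-personalized router cannot capture.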

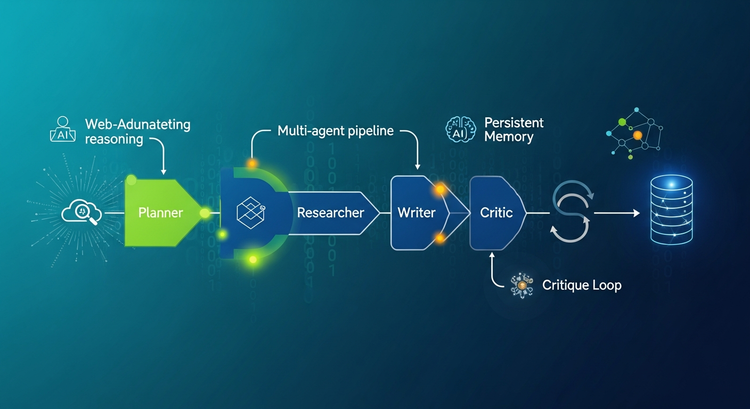

Agentic Routers for multi-step workflows

For more complex reasoning workflows, LLMRouter includes “agentic” routing options—routers that can operate over multi-step traces and coordinate multiple calls. Two agentic routers highlighted in the project are:

- knnmultiroundrouter, which applies k-nearest neighbor reasoning over multi-turn traces and targets complex tasks

- llmmultiroundrouter, an LLM-based agentic router that performs multi-step routing without its own training loop

Importantly, these agentic routers use the same configuration and data formats as the other router families, and can be swapped in via a single CLI flag—reducing friction when experimenting with different routing paradigms.

Data generation pipeline for routing datasets

Effective routing often depends on having the right training and evaluation data: you need examples of queries, candidate model responses, and scored outcomes so a router can learn which models perform best under which conditions. LLMRouter includes an end-to-end data generation pipeline that converts standard benchmarks and LLM outputs into routing datasets.

The pipeline supports 11 benchmarks:

- Natural QA

- Trivia QA

- MMLU

- GPQA

- MBPP

- HumanEval

- GSM8K

- CommonsenseQA

- MATH

- OpenBookQA

- ARC Challenge

It runs through three explicit stages:

- Stage 1, dataset extraction and splitting: data_generation.py pulls queries and ground-truth labels and produces training/testing JSONL splits.

- Stage 2, candidate model embeddings: generate_llm_embeddings.py creates embeddings for candidate LLMs using metadata.

- Stage 3, API calling and evaluation: api_calling_evaluation.py calls LLM APIs, evaluates responses, and merges scores with embeddings to produce routing records.

The outputs include query files, LLM embedding JSON, query embedding tensors, and routing data JSONL files. Each routing record can contain fields such as task_name, query, ground_truth, metric, model_name, response, performance, embedding_id, and token_num. Configuration is handled entirely through YAML, allowing engineers to repoint the pipeline to new datasets and candidate model lists without modifying the code.
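
To show what such a routing record might look like in practice, the sketch below builds a few records with the fields listed above, serializes them as JSONL, and computes mean performance per model. The field names come from the text, but the value types, the example values, and the `mean_performance` helper are assumptions rather than the pipeline's actual output.

```python
import json
from collections import defaultdict

# Field names follow the article; values and types are illustrative guesses.
records = [
    {"task_name": "gsm8k", "query": "What is 12 * 7?", "ground_truth": "84",
     "metric": "exact_match", "model_name": "small-model", "response": "84",
     "performance": 1.0, "embedding_id": 0, "token_num": 18},
    {"task_name": "gsm8k", "query": "What is 12 * 7?", "ground_truth": "84",
     "metric": "exact_match", "model_name": "large-model", "response": "84",
     "performance": 1.0, "embedding_id": 0, "token_num": 25},
    {"task_name": "math", "query": "Solve x^2 = 2x + 3.", "ground_truth": "-1, 3",
     "metric": "exact_match", "model_name": "small-model", "response": "3",
     "performance": 0.0, "embedding_id": 1, "token_num": 40},
    {"task_name": "math", "query": "Solve x^2 = 2x + 3.", "ground_truth": "-1, 3",
     "metric": "exact_match", "model_name": "large-model", "response": "-1, 3",
     "performance": 1.0, "embedding_id": 1, "token_num": 64},
]

# JSONL is one JSON object per line.
jsonl_text = "\n".join(json.dumps(r) for r in records)


def mean_performance(jsonl: str) -> dict[str, float]:
    """Parse JSONL routing records and average performance per model."""
    scores = defaultdict(list)
    for line in jsonl.splitlines():
        if line.strip():
            rec = json.loads(line)
            scores[rec["model_name"]].append(rec["performance"])
    return {model: sum(vals) / len(vals) for model, vals in scores.items()}


summary = mean_performance(jsonl_text)
print(summary)  # {'small-model': 0.5, 'large-model': 1.0}
```

Because each query appears once per candidate model, a router can be trained on exactly the comparison it needs: which model succeeded on which query, and at what token cost.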

Interactive chat interface

For hands-on testing, LLMRouter offers an interactive mode via llmrouter chat, which launches a Gradio-based chat UI over any router and configuration. The server can bind to a custom host and port, and can also expose a public sharing link.

The chat tool includes query modes that affect how routing interprets context:

- current_only: routing sees only the most recent user message

- full_context: routing uses the concatenated dialogue history

- retrieval: routing augments the query with the top k similar historical queries
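
The difference between the three modes is easiest to see as a function that builds the text handed to the router. The sketch below is a guess at the general shape, not the chat tool's code: the function names are hypothetical, the history is assumed to hold only past user messages, and token-overlap (Jaccard) similarity stands in for whatever similarity measure the library actually uses.

```python
def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity between two strings, in [0, 1]."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def build_routing_input(history: list[str], current: str,
                        mode: str, top_k: int = 2) -> str:
    """Return the text the router sees under each query mode."""
    if mode == "current_only":
        return current
    if mode == "full_context":
        return "\n".join(history + [current])
    if mode == "retrieval":
        # keep only the top_k past queries most similar to the current one
        ranked = sorted(history, key=lambda past: jaccard(past, current), reverse=True)
        return "\n".join(ranked[:top_k] + [current])
    raise ValueError(f"unknown query mode: {mode}")


history = [
    "how do I sort a list in python",
    "what is the capital of france",
    "sort a dict by value in python",
]
current = "sort a list of tuples in python"
print(build_routing_input(history, current, "retrieval"))
```

With this history, retrieval mode keeps the two Python sorting questions and drops the unrelated geography question, so the router sees relevant context without the full transcript.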

As users interact, the UI shows model choices in real time. Because it runs on the same router configuration used for batch inference, teams can use the chat interface as a practical debugging surface for production-style configurations.

Plugin system for custom routers

LLMRouter’s plugin architecture is aimed at teams that want to add organization-specific routing logic while keeping the library’s surrounding tooling intact. Custom routers live under custom_routers, subclass MetaRouter, and implement route_single and route_batch.

Configuration files in that directory specify data paths, hyperparameters, and optional default API endpoints. Plugin discovery scans multiple locations:

- The project’s custom_routers folder

- A ~/.llmrouter/plugins directory

- Any extra paths set via the LLMROUTER_PLUGINS environment variable
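
A search-path builder for those three locations could look like the sketch below. The directory names and the environment variable come from the text. The function name, the search order, and the assumption that LLMROUTER_PLUGINS holds several paths joined by the platform's path separator are guesses, not documented behavior.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def plugin_search_paths(project_root, env: Optional[Mapping[str, str]] = None) -> list[Path]:
    """List the directories to scan for custom routers, without duplicates."""
    env = os.environ if env is None else env
    paths = [
        Path(project_root) / "custom_routers",
        Path.home() / ".llmrouter" / "plugins",
    ]
    # Assumption: multiple extra paths are separated like PATH entries.
    extra = env.get("LLMROUTER_PLUGINS", "")
    paths += [Path(p).expanduser() for p in extra.split(os.pathsep) if p]

    unique, seen = [], set()
    for path in paths:
        if path not in seen:  # keep first occurrence, preserve order
            seen.add(path)
            unique.append(path)
    return unique


fake_env = {"LLMROUTER_PLUGINS": os.pathsep.join(["/opt/routers_a", "/opt/routers_b"])}
for path in plugin_search_paths("/srv/my_project", env=fake_env):
    print(path)
```

Passing the environment in as a parameter keeps the function easy to test without touching the real process environment.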

The project includes examples such as randomrouter (selects a model at random) and thresholdrouter (a trainable router that estimates query difficulty). These examples illustrate both ends of the spectrum: from simple randomized selection used for testing to learning-based approaches intended to approximate “difficulty-aware” model choice.
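
The shape of a difficulty-aware plugin can be sketched as follows. To stay self-contained, this example defines its own stand-in base class instead of importing the library's MetaRouter, whose real constructor and method signatures may differ. The class names, the keyword list, and the difficulty heuristic are assumptions, and unlike the project's thresholdrouter this version is an untrained heuristic.

```python
from abc import ABC, abstractmethod

# Hypothetical cue words that suggest a harder query.
HARD_HINTS = {"prove", "derive", "optimize", "debug", "explain"}


class MetaRouterStandIn(ABC):
    """Stand-in for the library's MetaRouter; the real base class may differ."""

    @abstractmethod
    def route_single(self, query: str) -> str:
        """Return the name of the model that should answer one query."""

    @abstractmethod
    def route_batch(self, queries: list[str]) -> list[str]:
        """Return one model name per query."""


class HeuristicThresholdRouter(MetaRouterStandIn):
    def __init__(self, small: str, large: str, threshold: float = 0.5):
        self.small, self.large, self.threshold = small, large, threshold

    def difficulty(self, query: str) -> float:
        """Crude difficulty proxy in [0, 1] from length and cue words."""
        words = query.lower().split()
        length_score = min(len(words) / 40.0, 1.0)
        keyword_score = 1.0 if any(w in HARD_HINTS for w in words) else 0.0
        return 0.5 * length_score + 0.5 * keyword_score

    def route_single(self, query: str) -> str:
        return self.large if self.difficulty(query) >= self.threshold else self.small

    def route_batch(self, queries: list[str]) -> list[str]:
        return [self.route_single(q) for q in queries]


router = HeuristicThresholdRouter("small-model", "large-model")
decisions = router.route_batch([
    "hi there",
    "prove that the sum of two even numbers is even",
])
print(decisions)  # ['small-model', 'large-model']
```

A trainable version would replace `difficulty` with a fitted model and learn the threshold from routing records, while keeping the same two routing methods.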

Why LLM routing matters for real-world LLM deployments

As LLM ecosystems mature, organizations increasingly operate “model portfolios” rather than relying on a single provider or model size. In that environment, routing becomes a concrete lever for controlling:

- Spend: avoiding unnecessary calls to the most expensive model

- Latency: sending easy questions to faster models

- Reliability: using multi-round or agentic strategies for difficult tasks

- User experience: personalizing model selection based on user-specific preferences and history

LLMRouter’s approach—standardizing router families, providing a unified interface, and offering a benchmark-driven dataset pipeline—targets the operational reality that “which model should answer?” is often as important as “what is the answer?”

Conclusion

LLMRouter packages LLM model selection into a dedicated, extensible system layer, combining multiple router families, a structured data generation pipeline across 11 benchmarks, and tooling for experimentation via CLI and a Gradio chat UI. For teams running multiple LLMs, it offers a practical framework to optimize inference by balancing quality and cost on a per-query basis.

Based on reporting originally published by www.marktechpost.com. See the sources section below.

Sources

- www.marktechpost.com

- https://github.com/ulab-uiuc/LLMRouter

- https://ulab-uiuc.github.io/LLMRouter/
